Some interesting findings from user research for the Zappa project (so far!)

One of the things about working openly is, fairly obviously, sharing your work as you go. This can be difficult for many reasons, not least because of the human tendency toward narrative, to completed stories with start, middle, and end.

The value of resisting this tendency and sitting in ambiguity for a while is that it allows slow hunches to form and serendipitous connections to be made. So it is with the user research I’m doing as part of the Zappa project for the Bonfire team. We need time to talk to lots of different types of people who meet our criteria, and to spend some time reflecting on what they’ve told us.

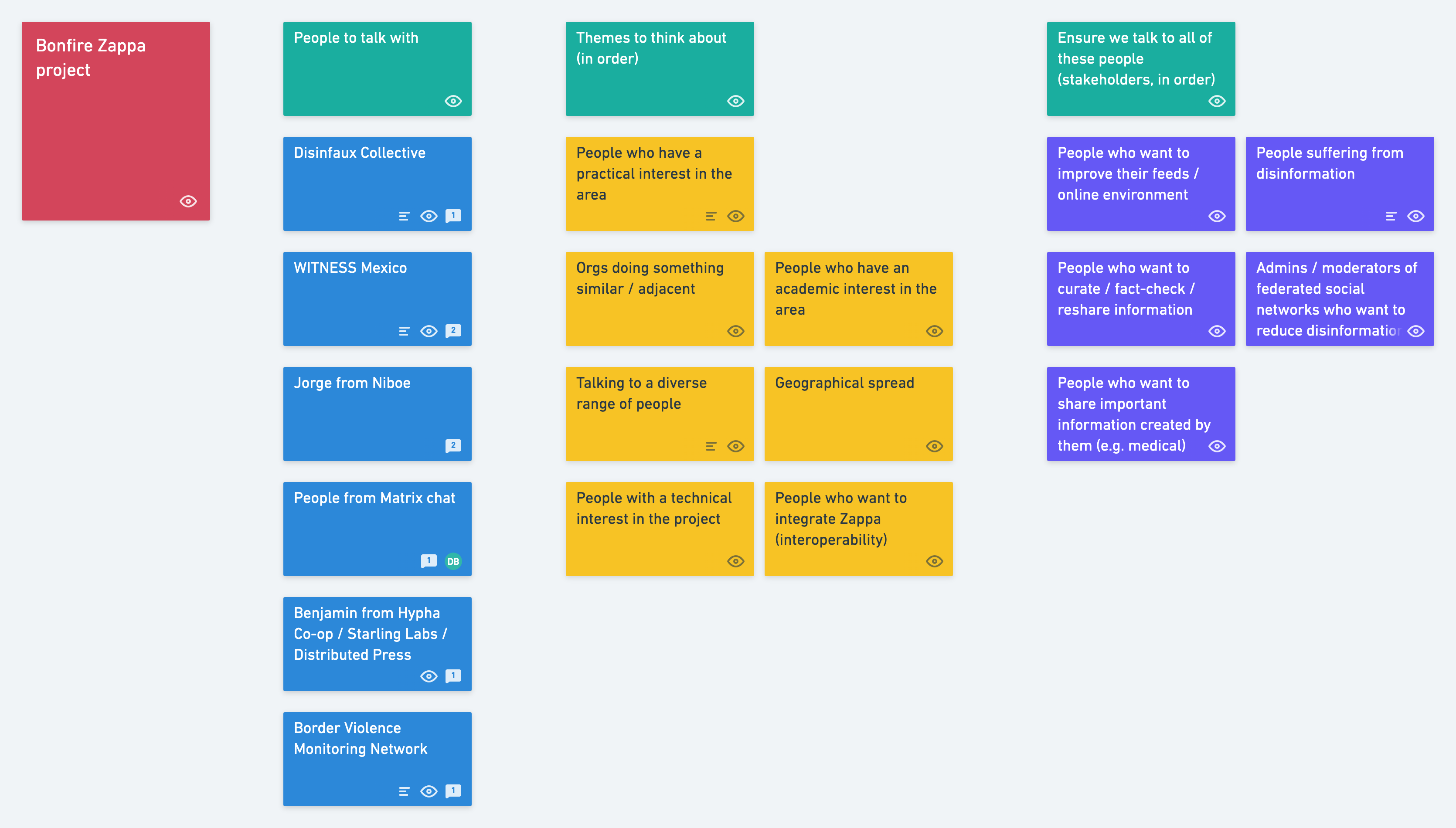

As I wrote in my previous post about the project, we’d identified some of the following:

- a list of people we can/should speak with

- themes of which we should be aware/cognisant

- groups of people we should talk with

Inevitably, since this initial work, we’ve come up with some obvious gaps in the people we should speak to (UX designers!). The people we’ve spoken with have recommended other people to contact as well as avenues of enquiry to follow. This is such an interesting topic that we need to be careful that the project doesn’t grow legs and run away with us…

10 interesting things people have told us so far

We haven’t started synthesising any of what our user research participants have said so far, but as we’re around halfway through the process of conducting interviews, I thought it might be worth sharing 10 interesting things they’ve told us. These are not in any particular order.

- Countering misinformation is time-consuming — fact-checking articles takes time, and by the time the result is published most of the people who were going to read the original article have already done so.

- Chat apps — public social networks are blamed for not dealing with mis/disinformation but some of the most problematic stuff is being shared via messaging services such as WhatsApp and Telegram.

- Difference between human and bot accounts — it’s possible to reason with a human being but impossible to do so with a bot account.

- Metaphor of adblock list — a way of reducing the burden of moderation on administrators and moderators of a federated social network instance by creating a more systematised version of something like the #Fediblock hashtag.

- Subscribing to moderator(s) — delegating moderation explicitly to another user, perhaps by automatically blocking/muting whatever they do.

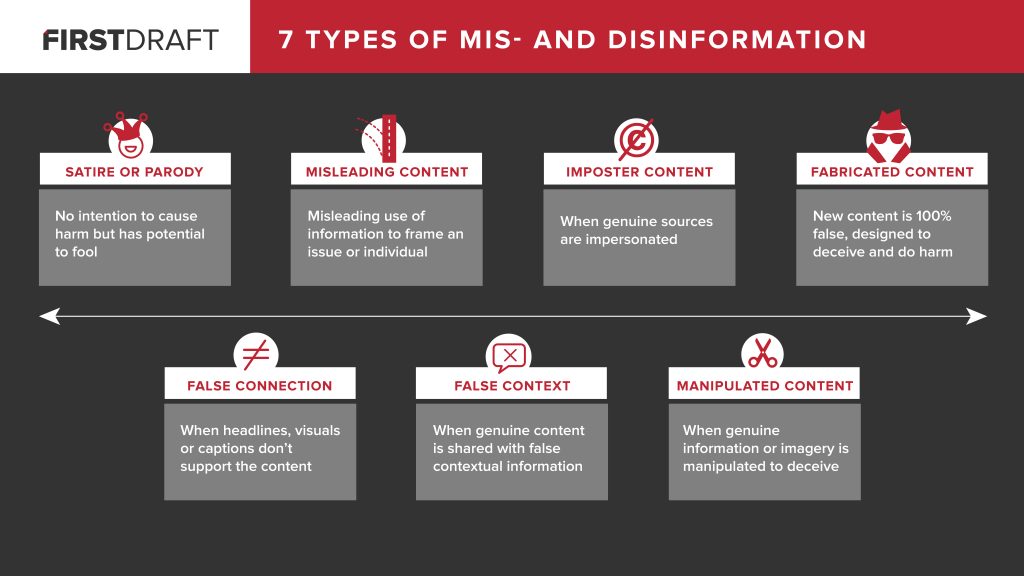

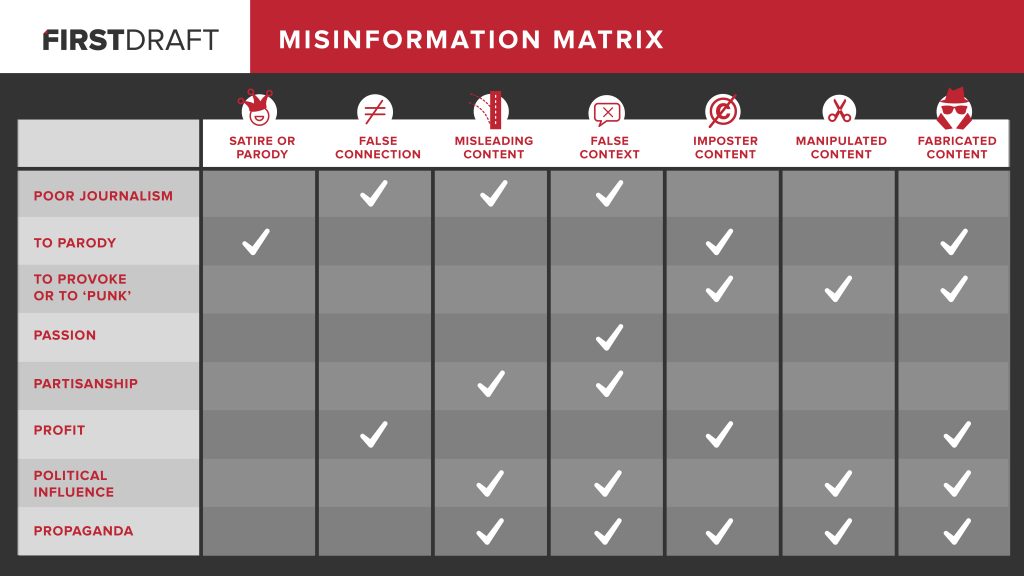

- Different categories of approaches — for example, reputational solutions that deal with trusted parties, technical solutions that prove something hasn’t been tampered with, and process-based solutions which make transparent the context in which the content was created and transmitted.

- Visualising connections — visualising the social graph could make it easier to spot outlier accounts which may be less trusted than those that lots of your other contacts are connected to.

- Fact-checking platforms can be problematic — they promote an assumption that there is a single ‘Truth’ and one version of events. They can be useful in some instances but can also be used to present a distorted view of the world.

- Frictionless design — by ‘decomplexifying’ the design of user interfaces we hide the system behind the tool and the trade-offs that have been made in creating it.

- Disappearing content — content that no longer exists can be a problem for derivative works / articles / posts that reference and rely on it to make valid claims.
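To make the ‘visualising connections’ idea a little more concrete, here is a rough Python sketch. It is not something Zappa or Bonfire implements, and nobody we interviewed described it in these terms. The graph shape, the function names, the example accounts, and the threshold are all assumptions made purely for illustration. It counts how many of your own contacts are connected to each account you don’t yet follow, then flags the accounts that few of them are connected to.

```python
from typing import Dict, List, Set

# Hypothetical social graph: account name -> accounts it is connected to.
Graph = Dict[str, Set[str]]

# Made-up example data, not taken from any real instance.
EXAMPLE_GRAPH: Graph = {
    "me": {"ana", "ben", "cy"},
    "ana": {"me", "dee", "eve"},
    "ben": {"me", "dee"},
    "cy": {"me", "dee", "eve", "zed"},
}


def shared_contact_counts(graph: Graph, me: str) -> Dict[str, int]:
    """Count, for each account I am not connected to, how many of my
    contacts are connected to it."""
    my_contacts = graph.get(me, set())
    counts: Dict[str, int] = {}
    for contact in my_contacts:
        for other in graph.get(contact, set()):
            # Skip myself and accounts I am already connected to.
            if other == me or other in my_contacts:
                continue
            counts[other] = counts.get(other, 0) + 1
    return counts


def outliers(graph: Graph, me: str, threshold: int = 1) -> List[str]:
    """Accounts that at most `threshold` of my contacts are connected to.
    The threshold is an arbitrary, assumed cut-off."""
    counts = shared_contact_counts(graph, me)
    return sorted(name for name, n in counts.items() if n <= threshold)


if __name__ == "__main__":
    print(shared_contact_counts(EXAMPLE_GRAPH, "me"))
    print(outliers(EXAMPLE_GRAPH, "me"))
```

In this made-up graph, all three of my contacts are connected to "dee", two are connected to "eve", and only one to "zed", so "zed" is the account that would stand out in a visualisation. Being an outlier is a prompt to look more closely, not a judgement that the account is untrustworthy.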

It’s been fascinating to see the different ways that people have approached our conversations, whether from a technical, design, political, scientific, or philosophical perspective (or, indeed, all five!)

Next steps

We’ve still got some people to talk with next week, but we are always looking to ensure a diverse range of user research participants with a decent geographical spread. As such, we could do with some help identifying people located in Asia (yes, the whole continent!) who might be interested in talking about their experiences, as well as people from minority and historically under-represented backgrounds in tech.

We could also do with talking with people who have suffered from mis/disinformation, any admins or moderators of federated social network instances, and UX designers who have a particular interest in mis/disinformation. You can get in touch via the comments below or at: [email protected]