Defederation and governance processes

This week I’ve noticed some Mastodon instances ‘defederating’ not only from those generally thought to be toxic, but also from large, general-purpose instances such as mastodon.social. This post is about governance processes and how we might steer a course between populism and oligarchy.

The first thing I should say is that I’m a middle-aged, straight, white guy playing life on pretty much the easiest difficulty level. So in this post I’m not commenting on any specific situation, but rather zooming out to think about this on a wider scale.

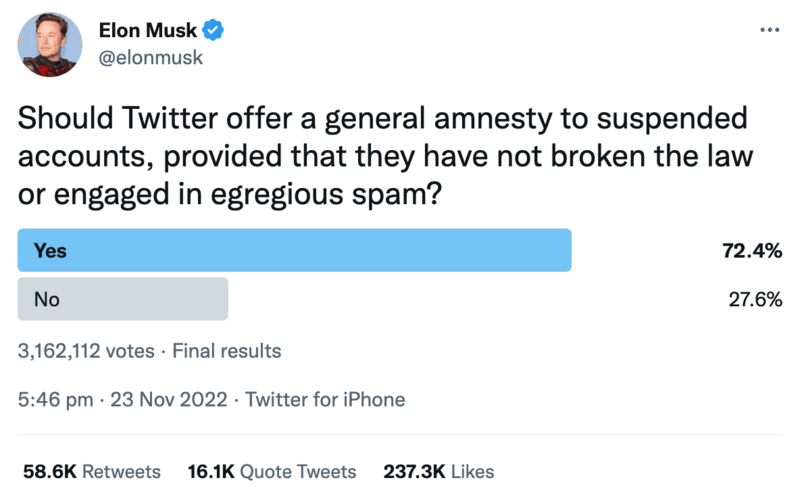

Mainly via screenshots (I rarely visit Twitter now, except to keep the @WeAreOpenCoop account up to date), I’ve seen that Elon Musk has run some polls. As others have commented, this is how a Roman Emperor would make decisions: through easy-to-rig polls suggesting that an outcome is “the will of the people”.

This is obviously an extremely bad, childish, and dangerous way to run a platform that, until recently, was almost seen as infrastructure.

At the other end of the spectrum is the kind of decision making I’m used to as a member of a co-op that is part of a wider co-operative network. Our daily decisions about matters large and small don’t necessarily require consensus, but they do require processes that allow us to align around a variety of issues. As I mentioned in my previous post, one good way to do this is through consent-based decision making.

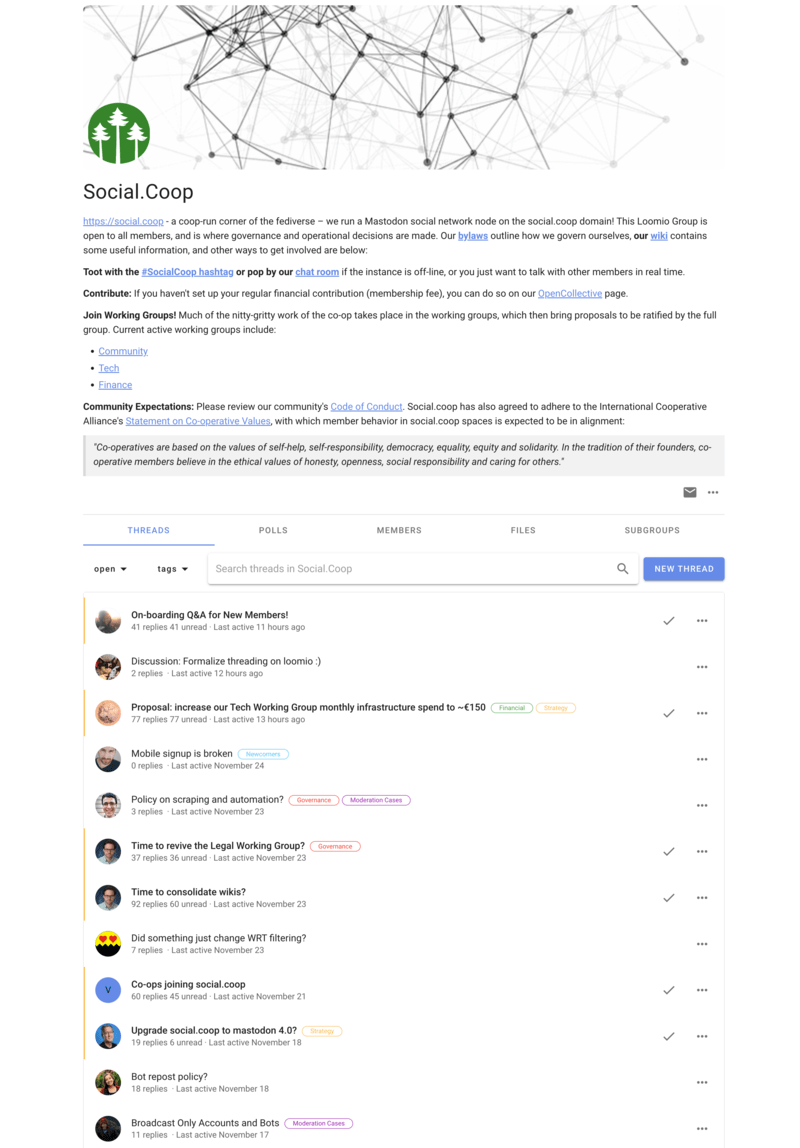

The social.coop instance that I currently call home on the Fediverse uses Loomio to make decisions in a way that anyone can view, and in which members of the instance can help decide. It’s not a bad process at all, but it is a difficult one to scale, especially when rather verbose people with time on their hands decide to have An Opinion. It also happens somewhere (Loomio) other than the place the discussion concerns (Mastodon).

So when I had one of my regular discussions with Ivan, one of the Bonfire team, I was keen to bring it up. He, of course, had already been thinking about this and pointed me towards Ukuvota, an approach which uses score voting to help with decision making:

> To “keep things the way they are” is always an option, never the default. Framing this option as a default position introduces a significant conservative bias; listing it as an option removes this bias and keeps a collective evolutionary.
>
> To “look for other options” is always an option. If none of the other current options are good enough, people are able to choose to look for better ones; this ensures that there is always an acceptable option for everyone.
>
> Every participant can express how much they support or oppose each option. Limiting people to choose their favorite or list their preference prevents them from fully expressing their opinions; scoring clarifies opinions and makes it much more likely to identify the best decision.
>
> Acceptance (non-opposition) is the main determinant for the best decision. A decision with little opposition reduces the likelihood of conflict, monitoring or sanctioning; it is also important that some people actively support the decision to ensure it actually happens.
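To make the Ukuvota principles above a little more concrete, here is a minimal Python sketch of a score vote in their spirit. It is not Ukuvota’s own method: the -3 to +3 scale, the example option names, and the ranking rule (least total opposition first, with total support breaking ties) are assumptions made purely for illustration. What it does show is that “keep things the way they are” and “look for other options” sit on every ballot as ordinary options, and that a blank score counts as neutral rather than as a vote for the status quo.

```python
from dataclasses import dataclass

# Hypothetical scale (an assumption, not Ukuvota's): -3 strongly oppose .. +3 strongly support.
MIN_SCORE, MAX_SCORE = -3, 3

# Always on the ballot as ordinary options, never as a default.
STATUS_QUO = "Keep things the way they are"
LOOK_FURTHER = "Look for other options"


@dataclass
class Tally:
    option: str
    opposition: int  # sum of the magnitudes of all negative scores
    support: int     # sum of all positive scores


def ballot(proposals: list[str]) -> list[str]:
    """Build a ballot: the proposals plus the two always-present options."""
    return proposals + [STATUS_QUO, LOOK_FURTHER]


def tally(options: list[str], votes: list[dict[str, int]]) -> list[Tally]:
    """Score every option, then rank by least opposition, then most support.

    Each vote maps option -> score; an option a voter leaves blank counts as 0.
    """
    results = []
    for option in options:
        opposition = support = 0
        for vote in votes:
            score = vote.get(option, 0)
            if not MIN_SCORE <= score <= MAX_SCORE:
                raise ValueError(f"score {score} for {option!r} is out of range")
            if score < 0:
                opposition += -score
            else:
                support += score
        results.append(Tally(option, opposition, support))
    # Acceptance (low opposition) decides first; active support breaks ties.
    return sorted(results, key=lambda t: (t.opposition, -t.support))


# Usage with hypothetical options and votes about another instance.
options = ballot(["Block the instance", "Limit the instance"])
votes = [
    {"Block the instance": 3, "Limit the instance": 1, STATUS_QUO: -2, LOOK_FURTHER: 0},
    {"Block the instance": -2, "Limit the instance": 2, STATUS_QUO: -1, LOOK_FURTHER: 1},
    {"Block the instance": 1, "Limit the instance": 2, STATUS_QUO: -3, LOOK_FURTHER: -1},
]
ranking = tally(options, votes)
for t in ranking:
    print(f"{t.option}: opposition {t.opposition}, support {t.support}")
```

With these made-up votes, “Limit the instance” comes out on top because nobody opposes it, even though one voter would have preferred blocking. Ranking on opposition first is one simple way of treating acceptance as the main determinant; a real process would also want a threshold of active support before acting.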

The examples given on the website are powerful but quite complicated, which is why I think there’s immense power in having sensible defaults. To my mind, democratic decision making is something you need to practise, but it shouldn’t become a burden.

I’m hoping that, after the v1.0 release of Bonfire, one of the extensions to emerge will make powerful democratic governance processes available right there in the social networking tool. If that were the case, I can imagine decisions around instance-blocking being made in a positive, timely, and democratic manner.

Watch this space! If you’re reading this and are involved in thinking about these kinds of things for projects you’re involved with, I’d love to have a chat.