Sticks and stones (and disinformation)

I guess, like most people who grew up in the 1980s and 1990s, I heard the phrase “sticks and stones may break my bones, but words will never hurt me” a lot. Parroted by parents and teachers alike, the sentiment may have seemed fairly unproblematic, but it’s a complete lie. In truth, whereas broken bones may heal relatively quickly, for some people it can take years of therapy to get over things that they experience during their formative years.

This post is about content moderation and is prompted by Elon Musk’s purchase of Twitter, which he’s promised to give a free speech makeover. As many people have pointed out, he probably doesn’t realise what he’s let himself in for. Or maybe he does, and it’s the apotheosis of authoritarian nationalism. Either way, let’s dig into some of the nuances here.

Here’s a viral video of King Charles III. It’s thirteen seconds long, and hilarious. One of the reasons it’s funny is that it pokes fun at monarchy, tradition, and an older, immensely privileged, white man. It’s obviously a parody and it would be extremely difficult to pass it off as anything else.

While I discovered this on Twitter, it also did the rounds on the Fediverse, and of course on chat apps such as WhatsApp, Signal, and Telegram. I shared it with others because it reflects my anti-monarchist views in a humorous way. It’s also a clever use of deepfake technology — although it’s not the most convincing example. I can imagine other people, including members of my family, not sharing this video partly because every other word is a profanity, but mainly because it undermines their belief in the seriousness and sanctity of monarchy.

In other words, and this is not exactly a deeply insightful point but one worth making nevertheless, the things we share with one another are social objects which are deeply contextual. (As a side note, this is why cross-posting between social networks seems so janky: each one has its own modes of discourse which only loosely translate elsewhere.)

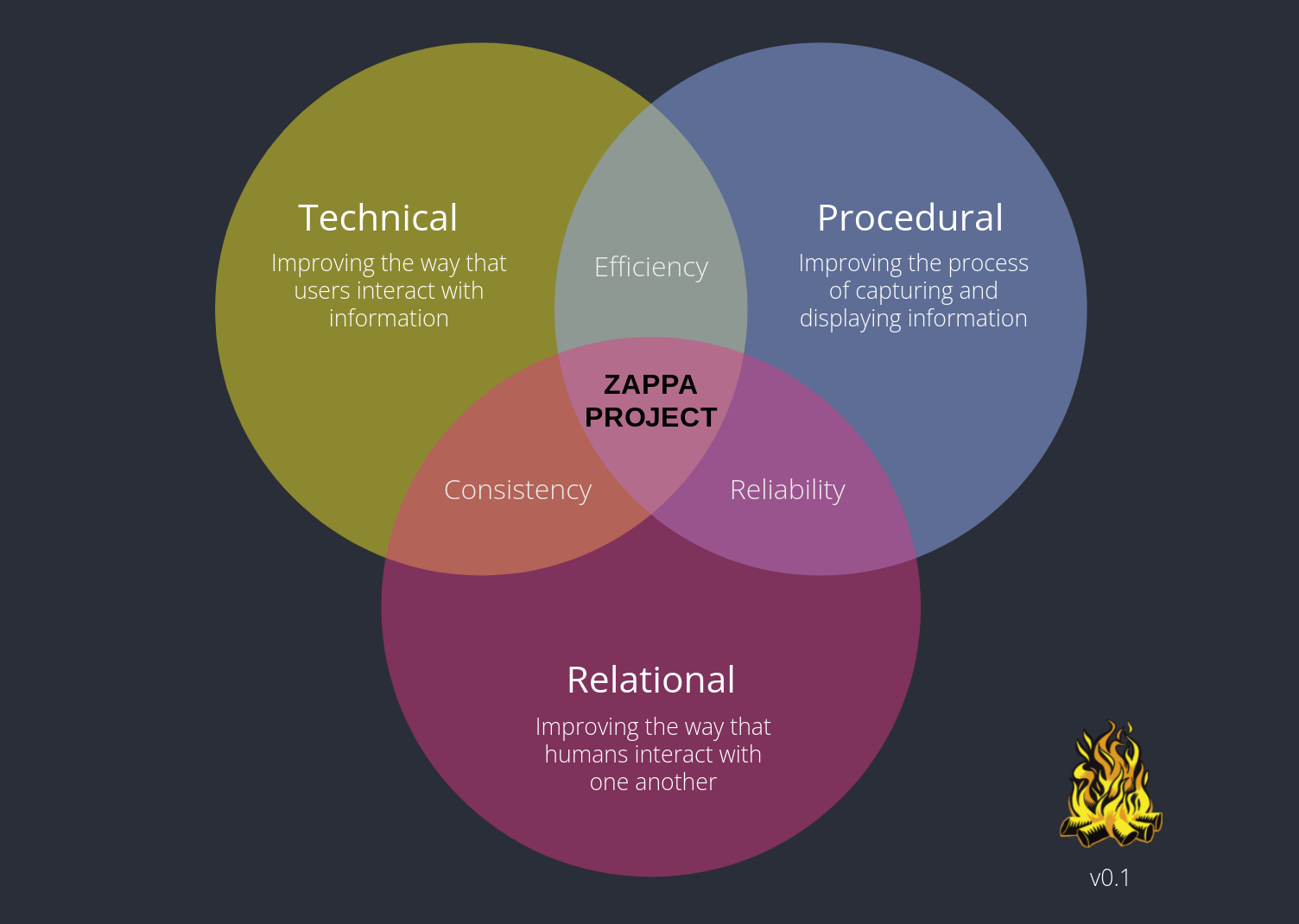

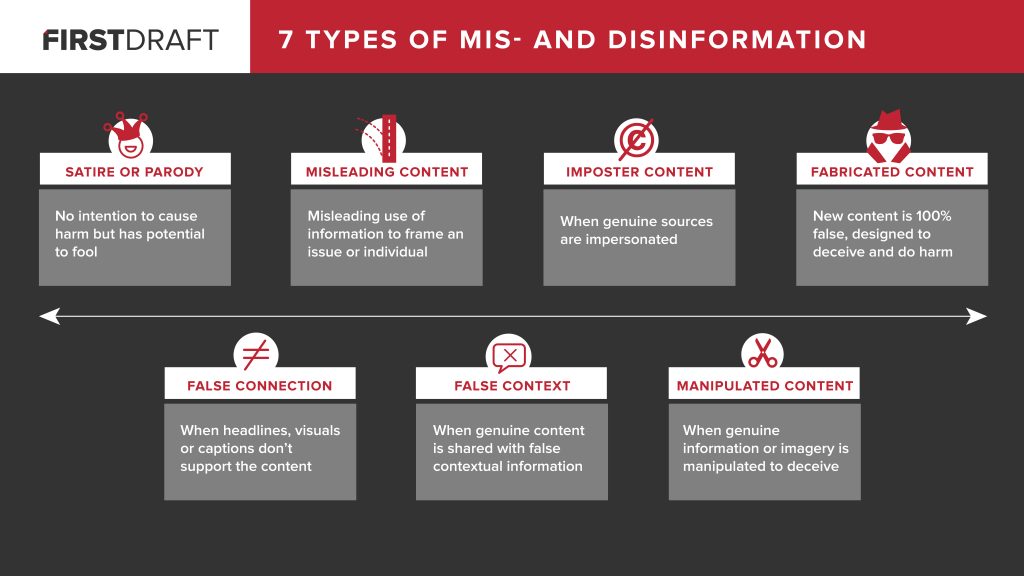

A few months back I wrote a short report for the Bonfire team’s Zappa project. The focus was on disinformation, and I used First Draft’s 7 Types of Mis- and Disinformation spectrum as a frame.

As you can see, ‘Satire or Parody’ is way over on the left side of the spectrum. However, as we move to the right, it’s not necessarily the content that shifts but rather the context. That’s important in the next example I want to share.

Unlike the previous video, this one of Joe Biden is more convincing as a deepfake. Not only is it widescreen with a ‘news’ feel to it, the voice is synthesised to make it sound original, and the lip-syncing is excellent. Even the facial expression when moving to the ‘Mommy Shark…’ verse is convincing.

It is, however, still very much a parody as well as a tech demo. The video comes from the YouTube channel of Synthetic Voices, which is a “dumping ground for deepfakes videos, audio clones and machine learning memes”. The intentions here therefore may be mixed, with some videos created with an intent to mislead and deceive.

Beyond the political implications of deepfakes, some of the more concerning examples involve deepfake porn. As the BBC has reported recently, while it’s “already an offence in Scotland to share images or videos that show another person in an intimate situation without their consent… in other parts of the UK, it’s only an offence if it can be proved that such actions were intended to cause the victim distress.” Trying to track down who created digital media can be extremely tricky at the best of times, and even if you do discover the culprit, they may be in a basement on the other side of the world.

So we’re at the stage where, with enough money or technological expertise, you can make it look as though anyone said or did anything you like. Soon, there’ll be an app for it. In fact, I’m pretty sure I saw on Hacker News that there’s already an app for creating deepfake porn. Of course there is. The genie is out of the bottle, so what are we going to do about it?

While I didn’t necessarily foresee deepfakes and weaponised memes, a decade ago in my doctoral thesis I did talk about the ‘Civic’ element as one of the Eight Essential Elements of Digital Literacies. And then in 2019, just before the pandemic, I travelled to New York to present on Truth, Lies, and Digital Fluency — taking aim at Facebook, who had a representative in the audience.

The trouble is that there isn’t a single way of preventing harms when it comes to the examples on the right-hand side of First Draft’s spectrum of mis- and disinformation. You can’t legislate it away or ban it in its entirety, because it’s not just a supply-side problem. Nor can you deal with it solely on the consumption side, through ‘digital literacy’ initiatives aiming to equip citizens with the mindsets and skillsets to be able to detect and deal with deepfakes and the like.

That’s why I think that the future of social interaction is federated. The aim of the Zappa project is to develop a multi-pronged approach which empowers communities. That is to say, instead of content moderation either being a platform’s job (as with Twitter or YouTube) or an individual’s job, it becomes the role of communities to deem what they consider problematic.

Many of those communities will be run by a handful of individuals who will share blocklists and tags with admins and moderators of other instances. Some might be run by states, news organisations, or other huge organisations and have dedicated teams of moderators. Still others might be run by individuals who decide to take all of that burden on themselves for whatever reason.
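To make the idea of sharing blocklists between instances a little more concrete, here’s a minimal sketch in Python. It is not how Zappa, Bonfire, or any existing Fediverse software works; the function name `merge_blocklists`, the `min_agreement` threshold, and the example domains are all hypothetical, invented purely for illustration. The assumption is that a community keeps its own blocklist and only adopts a block from elsewhere when enough trusted peers agree on it.

```python
from collections import Counter


def merge_blocklists(own, peers, min_agreement=2):
    """Combine our own blocklist with blocks that trusted peers agree on.

    own: set of domains this community has chosen to block.
    peers: dict mapping a peer instance name to the set of domains it blocks.
    min_agreement: hypothetical threshold; how many peers must block a
        domain before we adopt the block ourselves.
    """
    # Count how many peers block each domain (each peer counts once).
    votes = Counter(
        domain for peer_list in peers.values() for domain in set(peer_list)
    )
    # Keep only the domains that enough peers agree on.
    shared = {domain for domain, count in votes.items() if count >= min_agreement}
    # Our own decisions always stand, whatever the peers think.
    return set(own) | shared


# Illustrative data only: these instances and domains are made up.
own = {"spam.example"}
peers = {
    "instance-a.example": {"deepfakes.example", "spam.example"},
    "instance-b.example": {"deepfakes.example"},
    "instance-c.example": {"satire.example"},
}

blocked = merge_blocklists(own, peers)
print(sorted(blocked))
```

The point of the threshold is that context still matters: one community blocking a satire account doesn’t automatically mean every other community has to follow suit.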

There are no easy answers. But conspiracy theories have been around since the dawn of time, mainly because there really are people in power doing terrible things. So yes, we need appropriate technological and sociological approaches to things which affect democracy, mental health, and dignity. But we also need to engineer a world where billionaires don’t exist, partly so that an individual can’t buy an (albeit privatised) digital town square for fun.

One thing’s for sure: if Musk gets his way, we’ll be able to test the phrase “sticks and stones may break my bones…” on a whole new generation. Perhaps show them the Fediverse instead?

Main image created using DALL-E 2 (it seemed appropriate!)