Exploring the sweet spot for Zappa project approaches to misinformation

While we know that misinformation is not a problem that can ever be fully ‘solved’, it is important to try to reduce its harms. Last week, we published the first version of a report based on user research as part of the Zappa project, an initiative from the Bonfire team.

This week, I’ve narrated a Loom video giving an overview of the mindmap embedded in the report. Ivan requested this because he found that the way I explain the mindmap captures some nuance that perhaps isn’t in the report, which is more focused on recommendations.

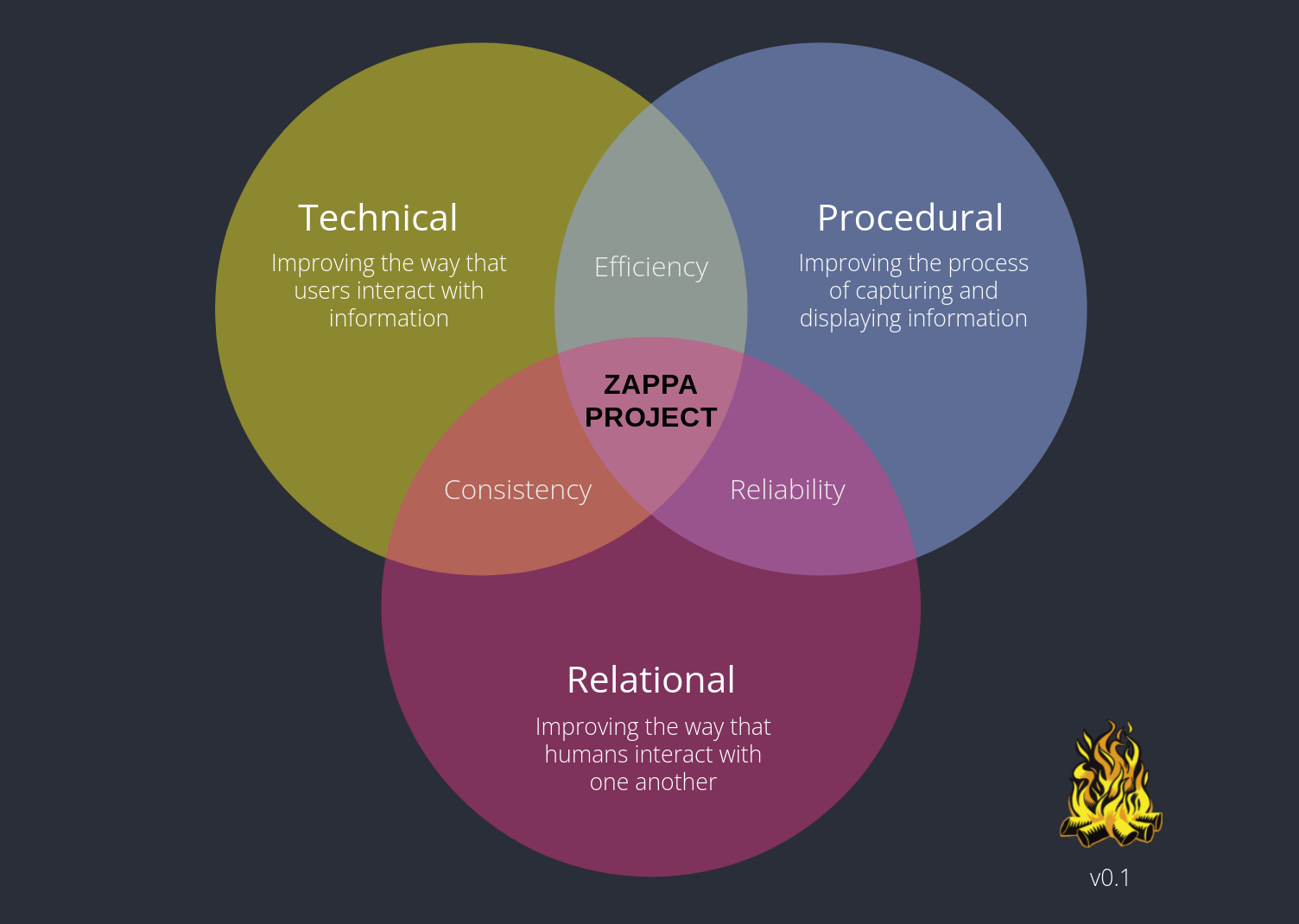

Another thing I was tasked with this week was creating a Venn diagram of the three types of approach that the Zappa project could take in its technical development. These were slightly tweaked from suggestions made by one of our user research participants. As you can see in the above diagram, these are:

- Technical — improving the way that users interact with information

- Procedural — improving the process of capturing and displaying information

- Relational — improving the way that humans interact with one another

It’s unlikely that any one intervention would sit squarely within a single type. For example, thinking about how information is presented to users, and allowing them to control that presentation, sits right in the middle of all three.

There are three overlaps other than the one right in the middle. These are:

- Technical / Procedural — we’ve currently labelled this intersection as Efficiency, because using technical approaches to improve processes usually makes them more efficient. This might include, for example, making it easier to block certain types of posts.

- Procedural / Relational — we’ve labelled this intersection as Reliability, because processes considered in terms of relationships tend to focus on repeatable patterns. This might include, for example, being able to validate that the account you’re interacting with hasn’t been hijacked.

- Relational / Technical — we’ve used the label Consistency for this one, because one finding from our research is that users are often overwhelmed by information. We can do something about that: this might include helping users feel in charge of their feeds to help avoid context collapse or aesthetic flattening.

You will notice the version number appended to this diagram is ‘v0.1’. It might be that we haven’t found the right words for these overlaps. It might be that some are more important than others. We’d love feedback from anyone paying attention to the Zappa project, whether you’ve been following the work around Bonfire since the beginning, or whether this is the first you’re hearing of it.

If it helps, feel free to grab the Google Slides original of the above Venn diagram, or comment below on a) what you think is good, b) anything you have questions about, or c) anything that concerns you.