TB871: Reflections on TMA02 and looking forward to Block 5 (SSM)

Note: this is a post reflecting on one of the modules of my MSc in Systems Thinking in Practice. You can see all of the related posts in this category.

After almost two weeks off, I’m back in the library continuing with my studies. Given the lack of downtime — only one week between modules — I’m wondering whether I’m going to be able to sustain this for three years to gain the full MSc.

I gained 85% for my first tutor-marked assignment (TMA01) whereas I received 75% for my most recent one (TMA02). As I said in an email to my tutor, the word count was so restrictive that I found it really difficult to get into much depth with my answers. The third question, for example, which called for an evaluation of particular tools for the purposes of making strategy, was limited to 300 words! Given that students are now penalised for going even 1% over the word count, I found this very frustrating.

I didn’t disagree with any of my tutor’s feedback, but some of what she suggested, or said was missing, had actually been included in a previous version of the document. I’m all for concise answers, but the word limits seem somewhat arbitrary.

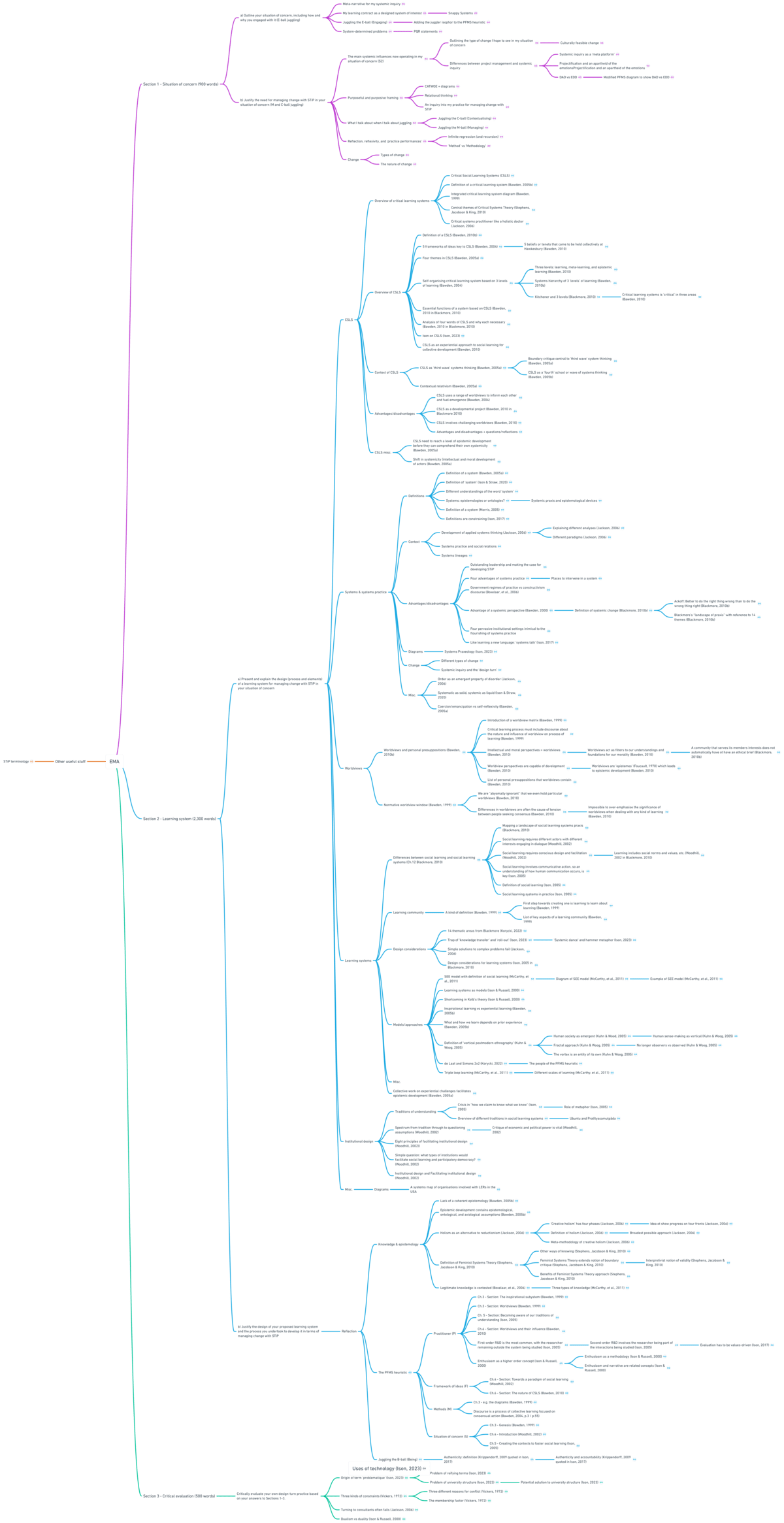

Moving on, I’m entering the Block 5 Tools Stream, which covers Soft Systems Methodology (SSM). This is something I covered as part of TB872, and I wrote some posts about SSM as part of that module.

As a teacher, I never liked it when students focused on what was in the test rather than on the curriculum. Even so, I’m going to have to be a bit strategic when it comes to TMA03 and my End of Module Assessment (EMA). It looks like TMA03 has exactly the same question structure as TMA02, so I’ve asked my tutor for model answers for the latter to help structure my answers for the former.

Given that the questions ask about sequential parts of the module, I might actually try a different strategy and answer them as I finish each block, rather than wait to answer them all together. We’ll see.