TB871: Managing variety using amplifiers and attenuators

Note: this is a post reflecting on one of the modules of my MSc in Systems Thinking in Practice. You can see all of the related posts in this category.

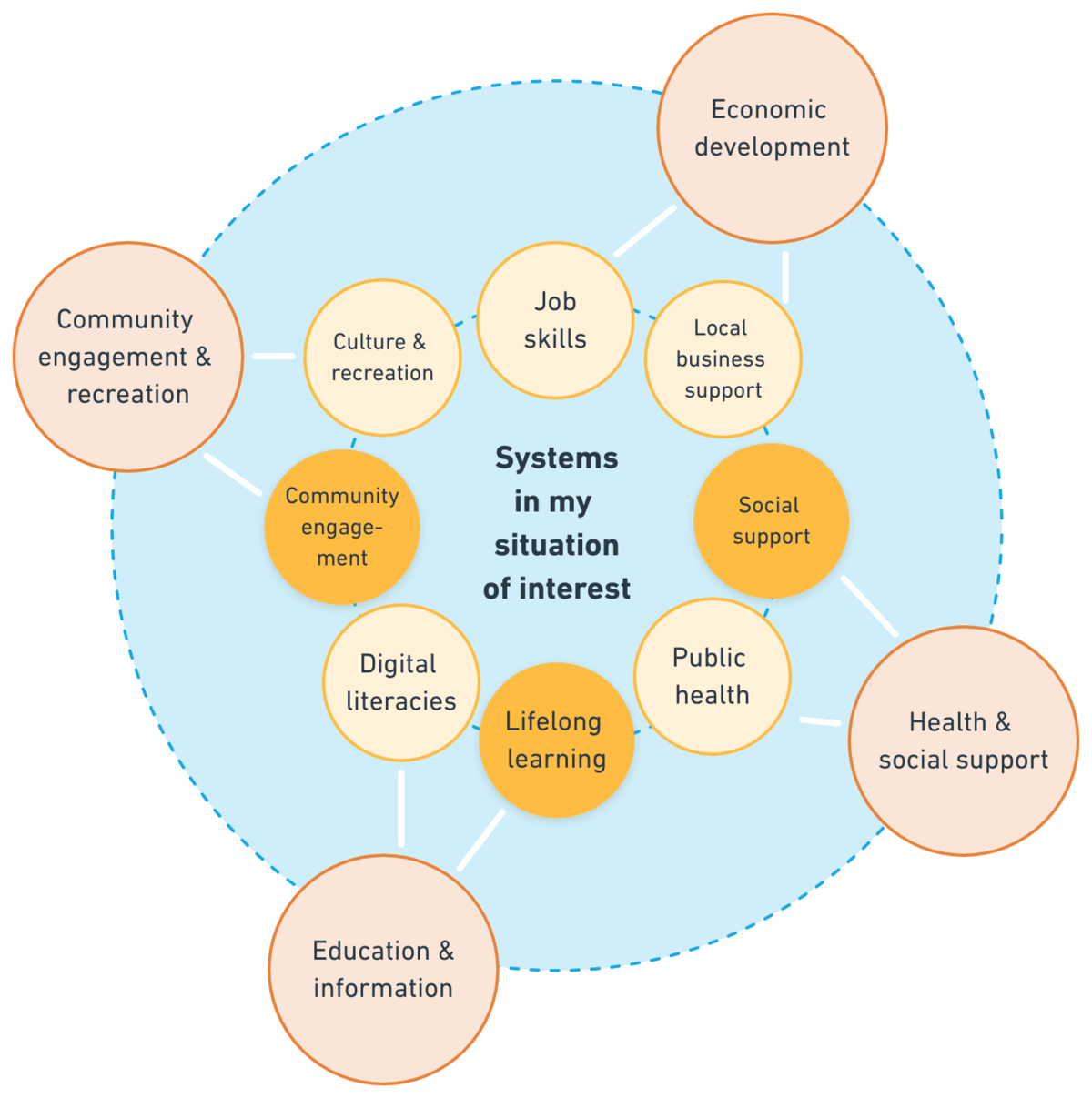

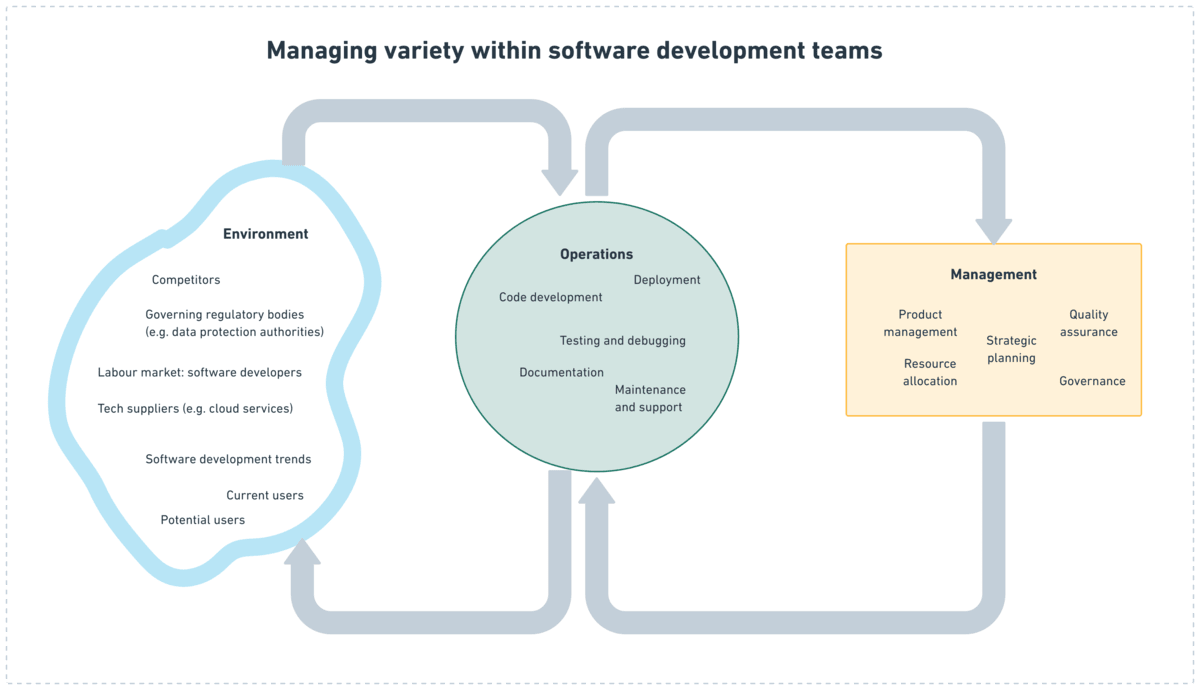

Balancing autonomy and control is crucial for maintaining a viable system. This balance is especially important in a software development team, where management seeks to guide the complex processes of development, testing, and deployment effectively. The accompanying diagram illustrates this within a software development environment. The rest of this post explores how ‘amplifiers’ and ‘attenuators’ help manage the variety in such systems so that they remain effective and coherent.

Autonomy and Control

Autonomy refers to the degree of freedom and self-direction possessed by parts of a system. Control is the ability of the overarching system to direct these autonomous parts towards a common goal.

According to Ashby’s Law of Requisite Variety, for a system to be viable, the variety (i.e. the number of distinct states, a measure of complexity) available to the controller (management) must at least match the variety of the system being controlled (operations). Achieving this balance is often challenging because the wider environment of software development is inherently complex and unpredictable.
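To make the law a little more concrete, here is a minimal sketch of its counting form: if operations can throw up D distinct disturbances and management has R distinct responses, the number of distinct outcomes cannot be pushed below D divided by R (rounded up). This assumes each response maps different disturbances to different outcomes, which is the usual simplification. The function name and the numbers are my own illustration, not part of the module materials.

```python
def min_outcome_variety(disturbance_variety: int, response_variety: int) -> int:
    """Lower bound on the number of distinct outcomes a regulator can achieve.

    Counting form of Ashby's law: outcomes >= disturbances / responses,
    rounded up because outcomes are whole states. Hypothetical helper
    written for illustration only.
    """
    if disturbance_variety < 1 or response_variety < 1:
        raise ValueError("variety must be a positive count of states")
    # Integer ceiling division avoids floating-point rounding.
    return (disturbance_variety + response_variety - 1) // response_variety


# 12 kinds of disturbance, 3 available responses: at least 4 outcomes remain.
under_resourced = min_outcome_variety(12, 3)

# 12 kinds of disturbance, 12 responses: a single desired outcome is achievable.
requisite = min_outcome_variety(12, 12)
```

In this toy example, only the second case gives management enough variety to hold operations to a single desired outcome.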

Amplifiers and Attenuators

To manage this complexity, systems use amplifiers and attenuators. These help align the variety between management and operations in an attempt to enable effective control without stifling autonomy.

Amplifiers increase the system’s capacity to handle variety by making use of additional resources and/or decentralising decision-making. In the software development example, this could include:

- Automated testing: using automated testing tools increases the variety of tests that can be conducted without overwhelming the developers.

- Flexible work schedules: implementing flexible working hours allows the team to adapt to varying workloads more effectively.

- Delegated authority: empowering team leaders or senior developers to make decisions on the spot can address issues promptly, enhancing responsiveness and reducing the burden on upper management.

Attenuators reduce the variety that the management system needs to handle. Again, in our software development example, this might look like:

- Segmented development phases: instead of tackling the entire development process at once, projects can be broken down into phases like development, testing, and deployment, reducing the amount of complexity that has to be managed at any one time.

- Standardised development frameworks: adopting an Agile framework such as Scrum can help ensure consistency and predictability, which in turn can simplify management oversight.

- Departmental organisation: dividing the team into specialised units (e.g., front-end, back-end, quality assurance) helps manage complexity by narrowing the focus of each group.
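The two lists above can be read as operations on variety counts. The sketch below is a toy model under my own assumptions: the issue attributes, response names, and team leads are all hypothetical, and real variety is far messier than a product of three short lists. It shows an attenuator collapsing many operational states into fewer categories, and an amplifier multiplying management's responses through delegation.

```python
from itertools import product

# Hypothetical attributes of an issue raised by the development team.
COMPONENTS = ["front-end", "back-end", "qa"]
SEVERITIES = ["low", "medium", "high"]
KINDS = ["bug", "feature", "question", "outage"]


def operational_states():
    """Every distinct kind of issue that operations could raise."""
    return set(product(COMPONENTS, SEVERITIES, KINDS))


def attenuate(states):
    """Attenuator: management sees only the component and whether severity is high."""
    return {(component, severity == "high") for component, severity, _ in states}


def amplify(manager_responses, delegates):
    """Amplifier: each delegate can apply any management response locally."""
    return set(product(delegates, manager_responses))


raw = operational_states()                      # 3 * 3 * 4 = 36 states
seen_by_management = attenuate(raw)             # 3 * 2 = 6 categories

responses = ["reprioritise", "escalate"]        # management alone: 2 responses
leads = ["front-end lead", "back-end lead", "qa lead"]
available = amplify(responses, leads)           # 3 * 2 = 6 responses

# Without either tool, 2 responses face 36 states; with both, 6 face 6.
balanced = len(available) >= len(seen_by_management)
```

Neither tool is enough on its own here: attenuation alone leaves 2 responses facing 6 categories, and amplification alone leaves 6 responses facing 36 states.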

A balancing act

The key to a viable system lies in the delicate balance between autonomy and control. Too much control can stifle creativity and responsiveness, while too much autonomy can lead to chaos and misalignment with organisational goals.

Amplifiers and attenuators are helpful tools in achieving this balance, as they ensure that management can effectively guide operations without being overwhelmed by complexity, and that operational units have the freedom to adapt and respond to immediate challenges.

References

- The Open University (2020) ‘3.3.2 Applying variety: autonomy versus control’, TB871 Block 3 Tools stream [Online]. Available at https://learn2.open.ac.uk/mod/oucontent/view.php?id=2261487&section=4.2 (Accessed 21 June 2024).